Why 80% of Proxmox High Availability Setups Fail (And How to Build One That Won't)

Proxmox's High Availability (HA) feature offers a powerful promise: when a server fails, your virtual machines (VMs) restart automatically on another machine. It’s the key to business continuity, and for any IT professional responsible for uptime, it’s the key to sleeping peacefully at night.

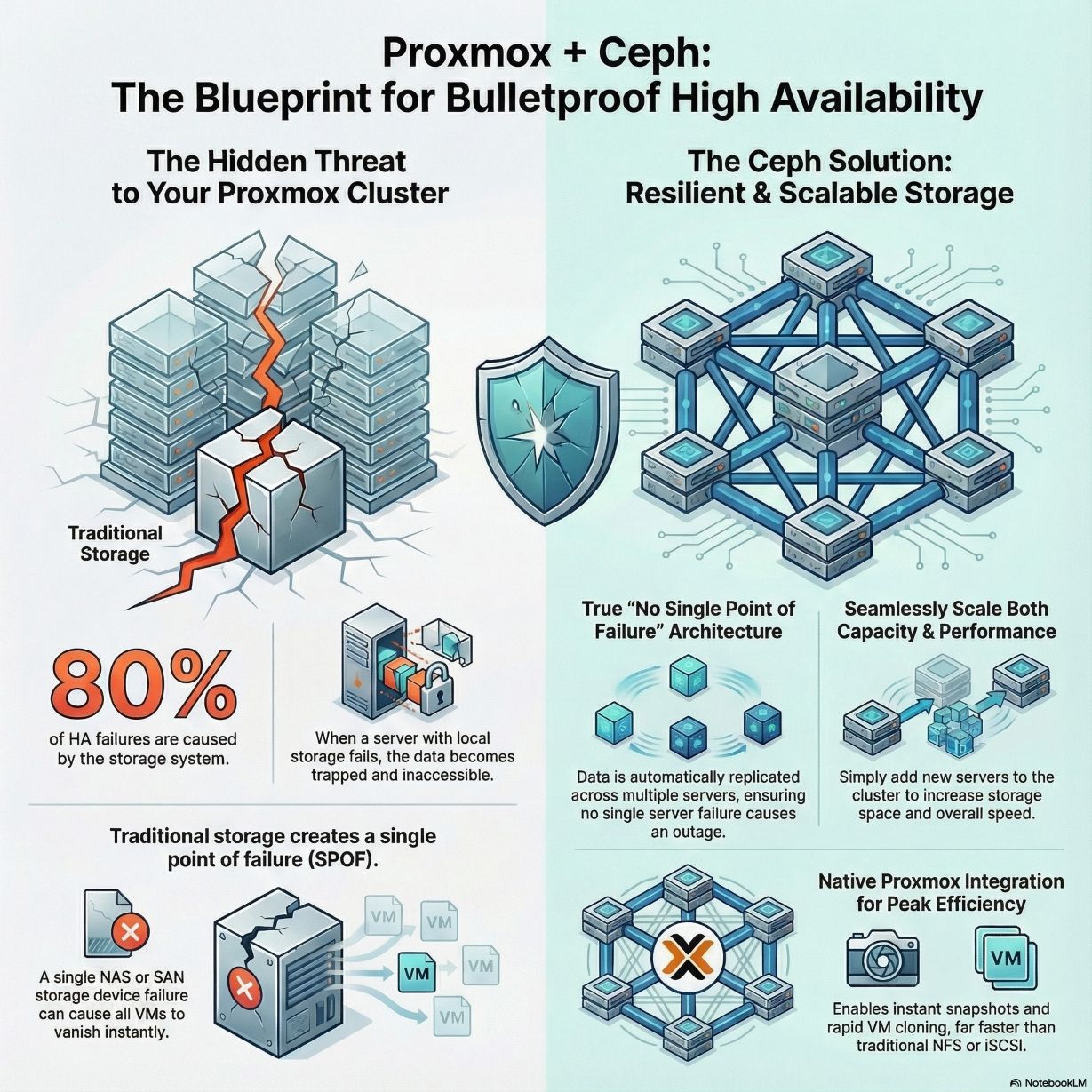

But based on my 20 years of hands-on experience designing these systems, I’ve seen that promise shatter time and again. A critical, counter-intuitive problem exists: 80% of HA failures are not caused by the compute nodes themselves. The real culprit is the storage system. Whether your data is locked on a failed server's local disk or your entire cluster depends on a single traditional NAS or even a dual-controller SAN, the result is the same: a single point of failure that can completely undermine your HA strategy.

This article will show you how to solve this critical weakness by fitting in the final piece of the HA puzzle: a distributed storage system like Ceph that lets you finally build an infrastructure that won't let you down.

Takeaway 1: Your Real Point of Failure Isn't What You Think

There is a common misconception that High Availability is primarily about having redundant compute servers. While server redundancy is essential, my experience shows the vast majority of HA failures — a staggering 80% — stem from storage.

The reason is simple: if the data itself isn't available, the HA mechanism is useless. If a VM's data is on a failed server's local disk, that data is locked on the dead machine, and Proxmox can do nothing. If you use a single traditional storage appliance like a NAS or SAN, and that one appliance fails, every VM in your entire cluster goes down instantly.

This is the definition of a "single point of failure," a critical weakness that makes an otherwise robust HA cluster surprisingly fragile.

Takeaway 2: Traditional "Shared Storage" Is Often a Scaling Trap

Many businesses use traditional shared storage—connecting their Proxmox cluster to a NAS or SAN via NFS or iSCSI. While this architecture might seem adequate at first, my experience shows it's a trap waiting to be sprung on any growing business, creating two core weaknesses.

- It's still a single point of failure:If that single storage appliance goes down, your entire Proxmox cluster fails. Even dual-controller SANs can represent a single failure domain. While controllers are redundant, the chassis, backplane, or software itself can still fail, taking the entire array—and your whole Proxmox cluster—down with it.

- It's difficult and expensive to scale: When you run out of capacity or performance, the only option is often a costly "rip and replace" project to buy a bigger, more powerful machine. This is a significant roadblock for growth.

Takeaway 3: True Resilience Means Scaling Out, Not Just Up

To solve the storage problem, Proxmox natively integrates a powerful solution: the Ceph distributed storage system. It eliminates the single point of failure and provides a path for seamless growth. It offers three superior advantages that make it the winning choice for enterprise deployments.

- No Single Point of Failure: Ceph distributes and replicates data across multiple servers. This isn't theoretical. You can literally walk up to a server in the cluster and pull its power cord. The VMs that were running on it will automatically migrate and continue running on other nodes—often without even restarting—using a complete data replica that already exists elsewhere. This is true enterprise-grade HA.

- Powerful Horizontal Scaling: In the world of Ceph, when you run out of space or performance, the solution is beautifully simple: just add a new server, connect it to the network, and join it to the cluster. Ceph automatically rebalances the data, and the new node contributes to both the total storage pool and the overall performance of the system.

- Native Proxmox Integration: Proxmox communicates with Ceph natively via RBD (RADOS Block Device), a direct block-level protocol that is far more efficient than network file system protocols like NFS or iSCSI. This tight integration enables powerful features like instant snapshots and the ability to clone new VMs almost instantaneously.

Takeaway 4: Hyper-Converged is Convenient, But Comes with a Performance "Tax"

Once you decide on Ceph, the next question is implementation: Hyper-Converged Infrastructure (HCI) or a separate, independent storage cluster?

The HCI approach runs both Proxmox compute and Ceph storage on the same servers. It's cost-effective and simpler to manage, making it an ideal choice for small-to-medium clusters of 3 to 10 nodes.

However, HCI comes with a hidden "performance tax" caused by resource contention. Ceph background operations, like data rebalancing after a failure, can consume significant CPU and network bandwidth, potentially slowing down the VMs running on the same hardware. Furthermore, the Ceph management features within the Proxmox web interface are not exhaustive. While they cover Block Storage and CephFS well, implementing advanced enterprise features like S3 object storage or NVMe-oF often requires dropping down to the command line (CLI), a key consideration for teams without deep Ceph expertise.

In contrast, an independent cluster separates compute (Proxmox) and storage (Ceph) into dedicated servers. This provides stable, predictable performance because storage and compute resources never interfere. It also offers clear fault isolation and greater flexibility to use the Ceph cluster for other enterprise needs, like S3 object storage.

Conclusion: Build Your Infrastructure on a Solid Foundation

To achieve true, enterprise-grade high availability with Proxmox, you must first solve the storage problem with a distributed system like Ceph. Relying on a single traditional storage appliance leaves you exposed to a single point of failure that invalidates your entire HA strategy.

The recommended path is to start with a cost-effective HCI model. As your business and data needs grow, plan to evolve to an independent cluster to ensure stable performance and scalability. By fitting in that final piece of the puzzle, you build an infrastructure that is truly resilient, so you can finally sleep peacefully at night.

"Storage is the foundation of IT infrastructure."

Is the foundation of your IT infrastructure built to last, or is it resting on a single point of failure?