How does Ceph cache tiering perform?

Introduction:

In this post, we share a benchmark that shows how Ceph cache tiering can improve the performance of an HDD pool by adding a cache tier backed by an NVMe pool.

What is Ceph Cache Tier and How It Works

The Ceph cache tier allows faster storage devices to be used as a cache for slower ones. This involves creating a pool of fast/expensive storage devices (such as NVMe SSDs) configured to act as a cache tier, and a slower/cheaper backing pool of either erasure-coded or slower devices (such as HDDs) configured to act as an economical storage tier. The cache tier stores frequently accessed data from the backing tier and serves read and write requests from clients. The cache tiering agent periodically flushes or evicts objects from the cache tier based on certain policies.

Ceph Cache Tier Demo

In the past, when SATA SSDs were used as the cache tier storage devices, the performance improvement from cache tiering was insignificant. Today, NVMe SSDs cost much less than they did several years ago, and they are far faster than HDDs. We wanted to know whether using NVMe SSDs as the cache tier can substantially help an HDD pool.

To find out, we benchmarked an HDD-based storage pool with and without an NVMe cache tier.

Cluster Setup

| Item | Specification |
| --- | --- |
| NVMe hosts | 3 x Ambedded Mars500 Ceph appliances |
| CPU (per Mars500) | 1 x Ampere Altra Arm, 64 cores, 3.0 GHz |
| Memory (per Mars500) | 96 GiB DDR4 |
| Network (per Mars500) | 2 x 25 Gbps ports, Mellanox ConnectX-6 |
| OSD drives (per Mars500) | 8 x Micron 7400 960 GB |

| Item | Specification |
| --- | --- |
| HDD hosts | 3 x Ambedded Mars400 Ceph appliances |
| CPU (per Mars400) | 8 nodes, each a quad-core Arm64 at 1.2 GHz |
| Memory (per Mars400) | 4 GiB per node, 32 GiB per appliance |
| Network (per Mars400) | 2 x 2.5 Gbps per node; 2 x 10 Gb uplinks via in-chassis switch |
| OSD drives (per Mars400) | 8 x 6 TB Seagate Exos HDD |

Ceph Cluster information

- 24 x OSD on NVMe SSD (3x Ambedded Mars500 appliances)

- 24x OSD on HDD (3x Ambedded Mars400 appliances)

- HDD and NVMe servers are located in separate CRUSH roots.

Test Clients

- 2 x physical servers. 2x 25Gb network card

- Each server runs 7x VMs.

- Each VM has 4 cores and 8 GB of memory.

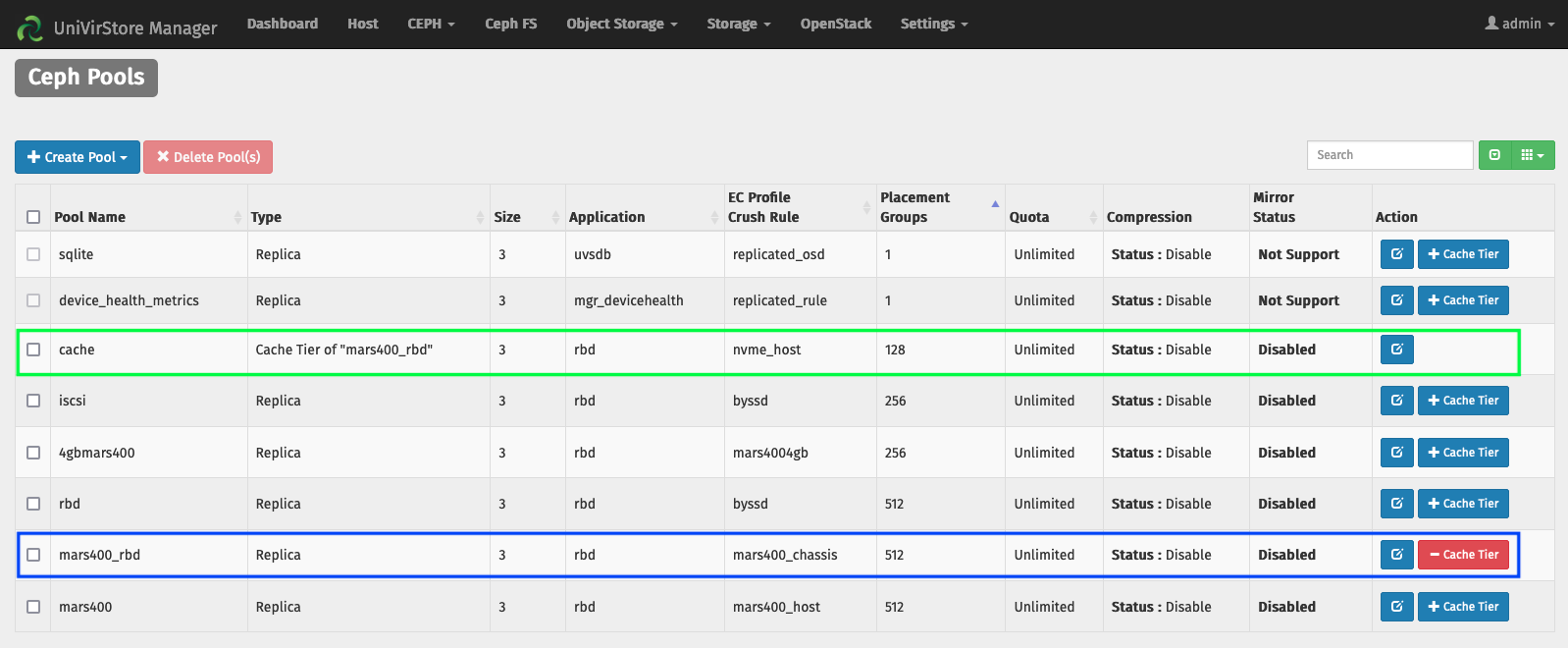

Set Up the Cache Tier with Ambedded UVS Manager

1. Create a base pool using the HDD OSDs.

2. Create an NVMe pool using the NVMe SSD OSDs.

3. Add the NVMe pool as the cache tier of the HDD pool.
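The three steps above were done in the UVS manager. For readers working from the command line, step 3 can be approximated with the upstream `ceph osd tier` commands. The following is a minimal sketch, not a description of what UVS manager runs internally. It assumes both pools already exist on their respective CRUSH roots, it borrows the pool names `mars400_rbd` and `cache` from the pool stats shown later in this post, and the helper name `cache_tier_commands` is hypothetical. The script only prints the commands; it does not execute them.

```python
import shlex


def cache_tier_commands(base_pool: str, cache_pool: str, mode: str = "writeback"):
    """Return the ceph CLI commands that attach cache_pool to base_pool.

    Only builds the argument lists; nothing is executed here.
    """
    return [
        # Associate the cache pool with the backing (base) pool.
        ["ceph", "osd", "tier", "add", base_pool, cache_pool],
        # Select the cache mode (writeback in this test).
        ["ceph", "osd", "tier", "cache-mode", cache_pool, mode],
        # Route client I/O for the base pool through the cache pool.
        ["ceph", "osd", "tier", "set-overlay", base_pool, cache_pool],
    ]


if __name__ == "__main__":
    # Pool names are assumptions taken from the pool stats output in this post.
    for argv in cache_tier_commands("mars400_rbd", "cache"):
        print(shlex.join(argv))
```

Building argument lists rather than one shell string keeps the sketch safe to pass to `subprocess.run` later without quoting problems.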

The default cache tier configuration:

- Cache mode: writeback

- hit_set_count = 12

- hit_set_period = 14400 sec (4 hours)

- target_max_bytes = 2 TiB

- target_max_objects = 1 million

- min_read_recency_for_promote & min_write_recency_for_promote = 2

- cache_target_dirty_ratio = 0.4

- cache_target_dirty_high_ratio = 0.6

- cache_target_full_ratio = 0.8

- cache_min_flush_age = 600 sec.

- cache_min_evict_age = 1800 sec.
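The ratio settings are easier to read as absolute numbers. In Ceph, the dirty and full ratios are applied relative to `target_max_bytes` and `target_max_objects`, so the values listed above translate into concrete flush and evict thresholds. The sketch below only does that arithmetic; the function names `thresholds` and `hit_set_window_hours` are hypothetical helpers, and the values are copied from the list above.

```python
TIB = 2**40

# Values copied from the configuration list above.
config = {
    "hit_set_count": 12,
    "hit_set_period": 14400,  # seconds
    "target_max_bytes": 2 * TIB,
    "target_max_objects": 1_000_000,
    "cache_target_dirty_ratio": 0.4,
    "cache_target_dirty_high_ratio": 0.6,
    "cache_target_full_ratio": 0.8,
}

RATIO_KEYS = (
    "cache_target_dirty_ratio",       # agent starts flushing dirty objects
    "cache_target_dirty_high_ratio",  # agent flushes dirty objects faster
    "cache_target_full_ratio",        # agent starts evicting clean objects
)


def thresholds(cfg):
    """Convert each ratio into a size in TiB and an object count."""
    out = {}
    for key in RATIO_KEYS:
        ratio = cfg[key]
        out[key] = {
            "tib": ratio * cfg["target_max_bytes"] / TIB,
            "objects": round(ratio * cfg["target_max_objects"]),
        }
    return out


def hit_set_window_hours(cfg):
    """Total time span covered by the retained hit sets, in hours."""
    return cfg["hit_set_count"] * cfg["hit_set_period"] / 3600


if __name__ == "__main__":
    for key, value in thresholds(config).items():
        print(f"{key}: {value['tib']:.1f} TiB or {value['objects']} objects")
    print(f"hit set history: {hit_set_window_hours(config):.0f} hours")
```

With these settings, flushing begins at about 0.8 TiB of dirty data (or 400,000 dirty objects), speeds up at about 1.2 TiB, and eviction begins at about 1.6 TiB (or 800,000 objects). The 12 hit sets of 4 hours each cover 48 hours of access history.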

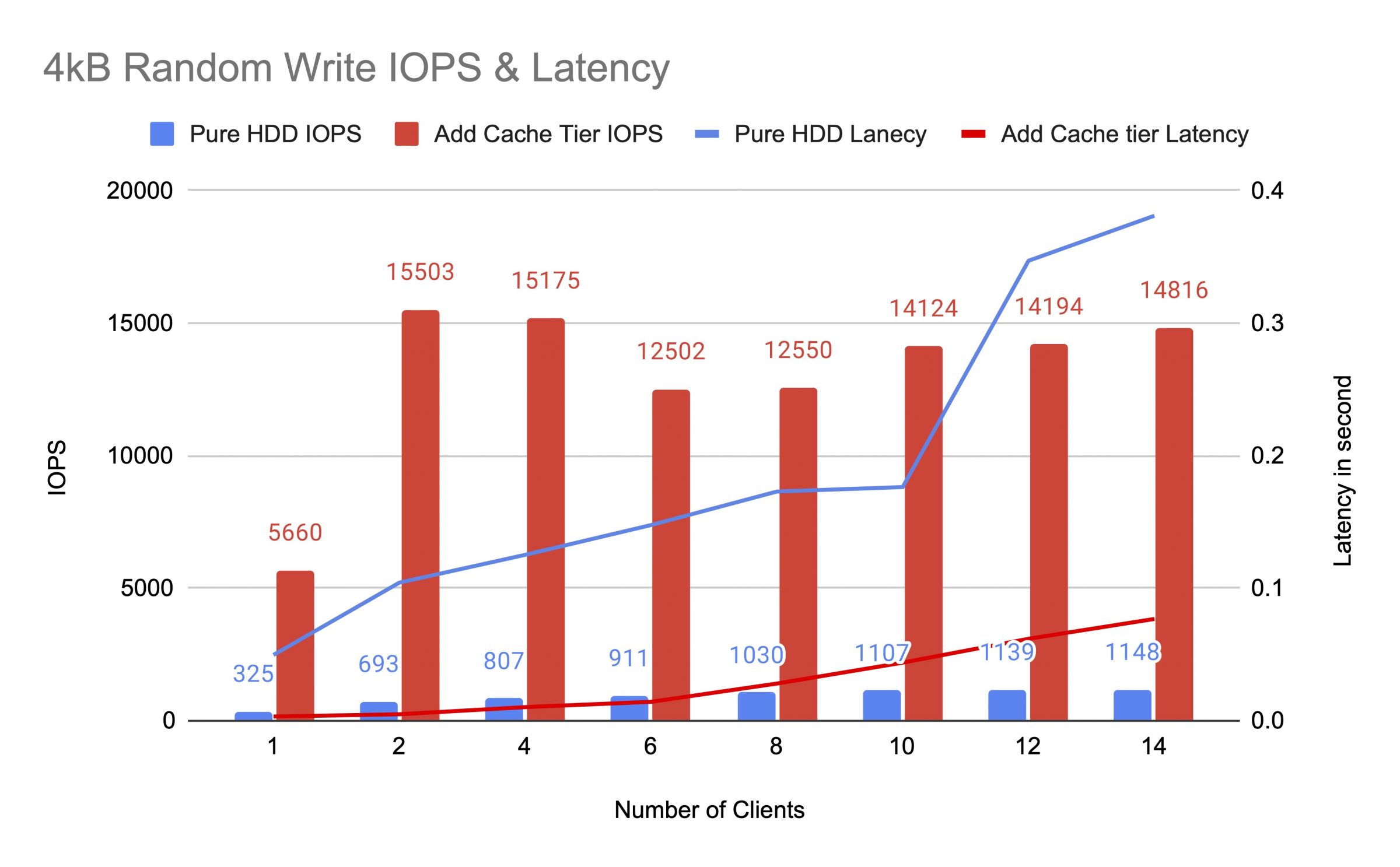

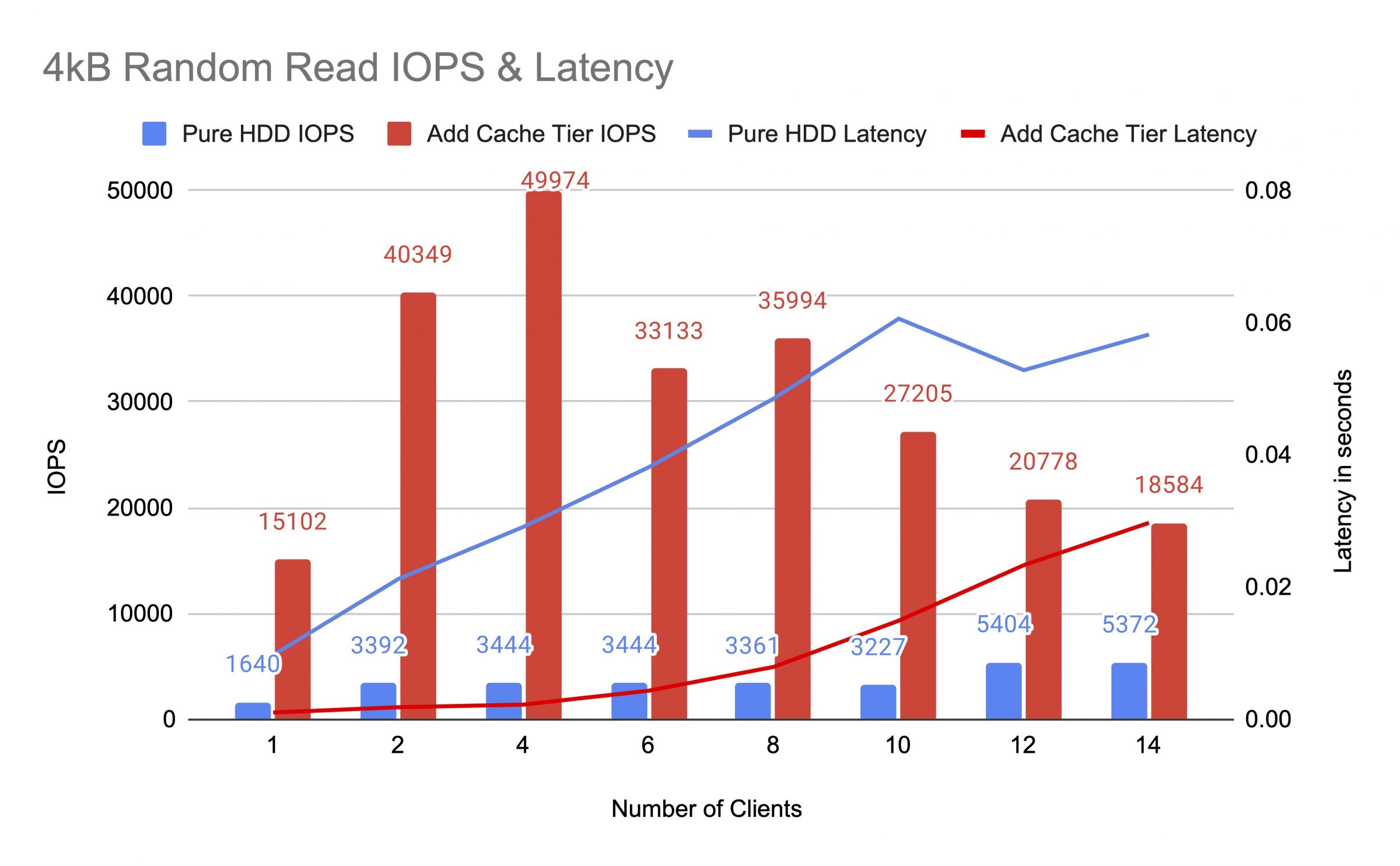

We tested the HDD pool before and after adding the cache tier, using up to 14 clients to generate test loads. Each client mounted an RBD for the fio test. The test load started with one client, and the number of clients increased after each test job completed. Each test cycle lasted five minutes and was controlled automatically by Jenkins. The performance of a test job was the sum of all the clients' results. Before testing the cache tier, we wrote data to the RBDs until the cache tier pool was filled beyond cache_target_full_ratio (0.8).
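Summing per-client results is straightforward when each client writes its fio report as JSON. The sketch below is one way to do that aggregation, assuming each client ran fio with `--output-format=json`; it is not the Jenkins job used in this test. The function name `aggregate_fio_results` is hypothetical, and the sample numbers are made up for illustration and are not measurements from this benchmark.

```python
def aggregate_fio_results(client_reports):
    """Sum IOPS and bandwidth over fio JSON reports, one report per client.

    Each report is the parsed JSON from one fio run. fio reports bandwidth
    ("bw") in KiB/s and IOPS as a float for each job and direction.
    """
    total = {"read_iops": 0.0, "write_iops": 0.0, "read_bw_kib": 0, "write_bw_kib": 0}
    for report in client_reports:
        for job in report["jobs"]:
            total["read_iops"] += job["read"]["iops"]
            total["write_iops"] += job["write"]["iops"]
            total["read_bw_kib"] += job["read"]["bw"]
            total["write_bw_kib"] += job["write"]["bw"]
    return total


# Hypothetical reports from two clients running a 4 kB random write job.
sample_reports = [
    {"jobs": [{"read": {"iops": 0.0, "bw": 0}, "write": {"iops": 1000.0, "bw": 4000}}]},
    {"jobs": [{"read": {"iops": 0.0, "bw": 0}, "write": {"iops": 1500.0, "bw": 6000}}]},
]

totals = aggregate_fio_results(sample_reports)

if __name__ == "__main__":
    print(totals)
```

In practice each report would come from `json.load()` on a client's fio output file rather than from an inline dictionary.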

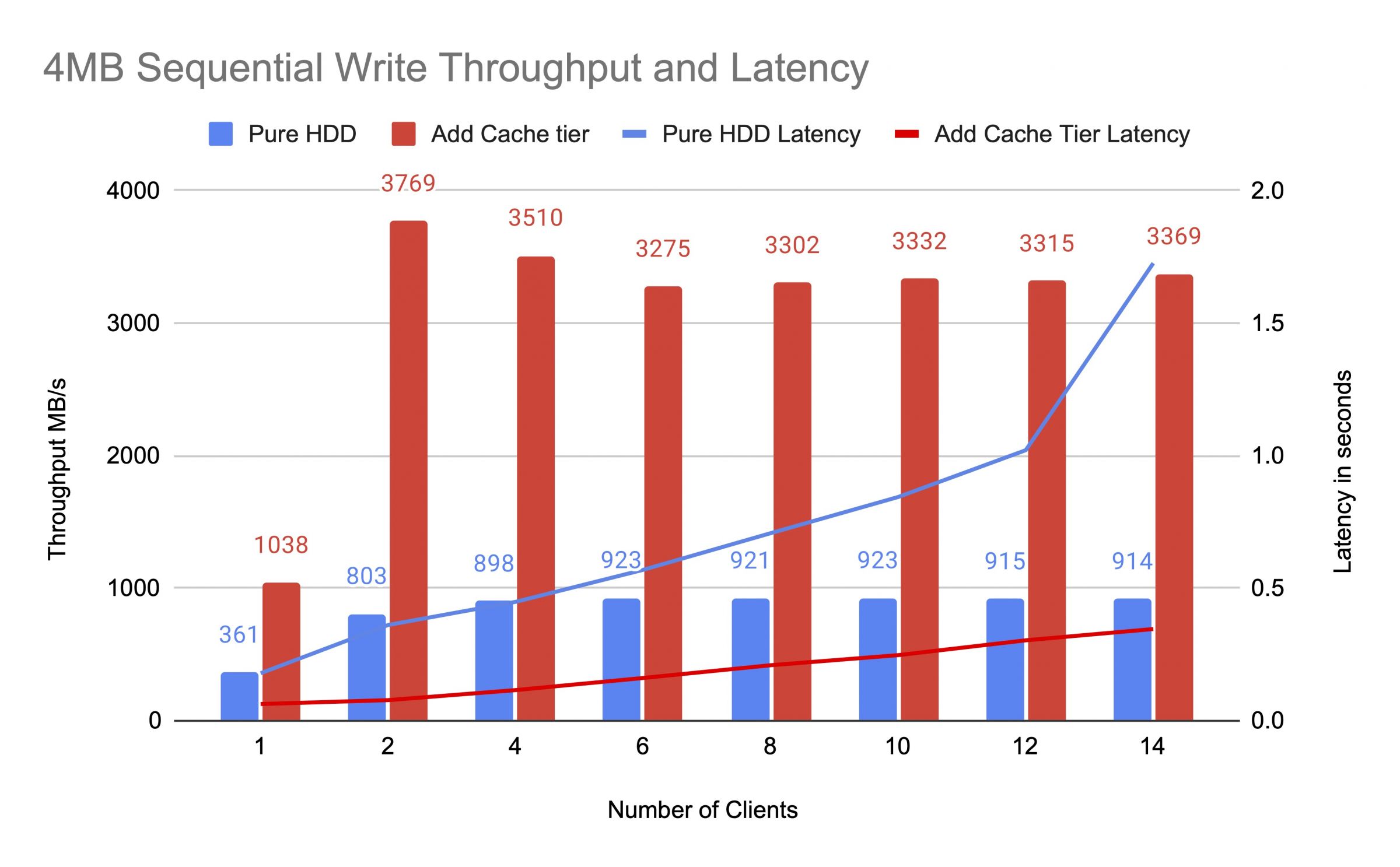

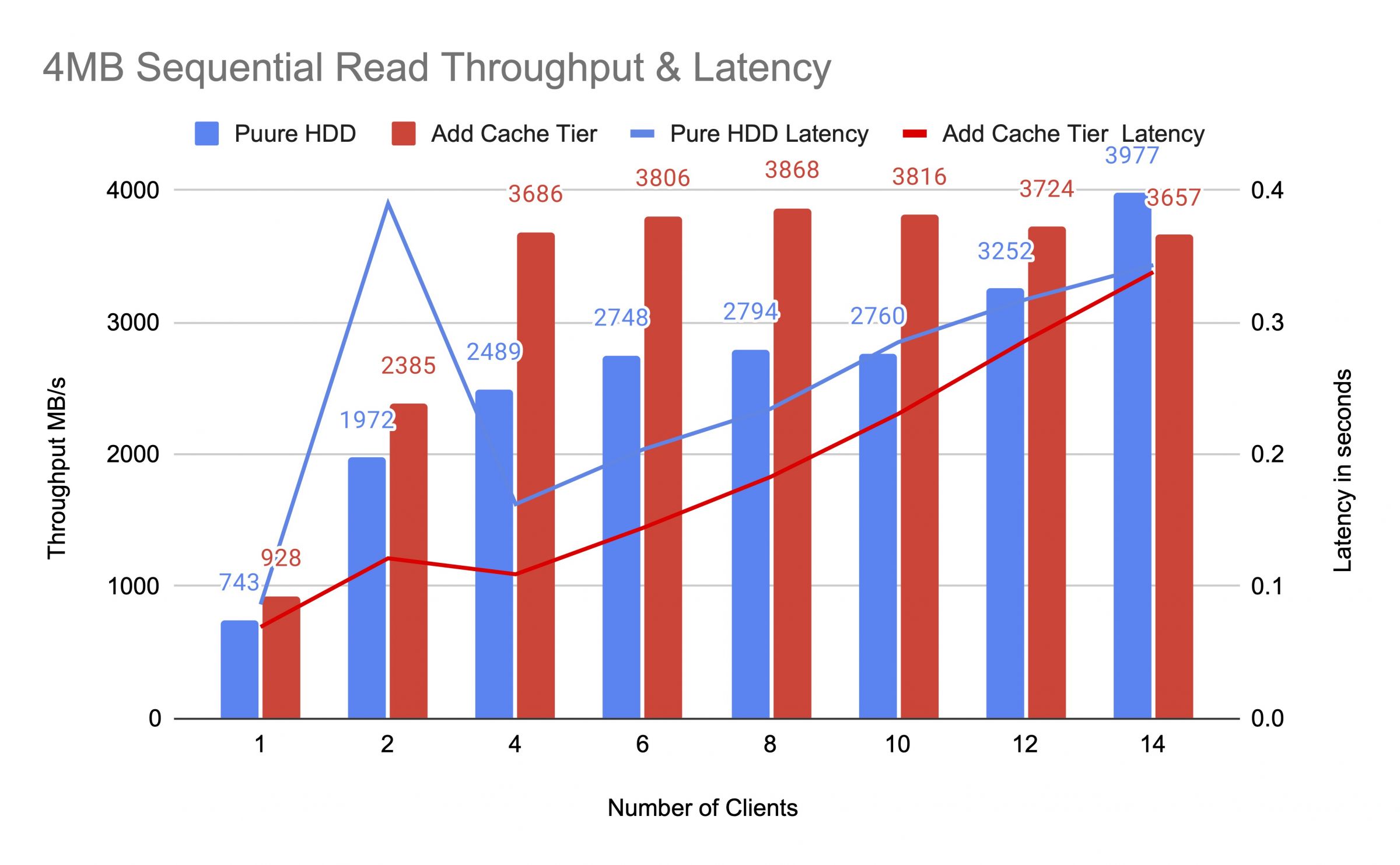

The charts show that adding an NVMe cache pool significantly improved the performance of the HDD pool.

During the cache tier test, we observed pool stats using the ceph osd pool stats command. The cache and base pools had flushing, evicting, and promoting activities. We captured pool stats at different times during the cache tier test.

Data was written to the cache

pool cache id 84

client io 21 MiB/s wr, 0 op/s rd, 5.49k op/s wr

pool mars400_rbd id 86

nothing is going on

Cache was promoting and evicting

pool cache id 84

client io 42 MiB/s wr, 0 op/s rd, 10.79k op/s wr

cache tier io 179 MiB/s evict, 17 op/s promote

pool mars400_rbd id 86

client io 0 B/s rd, 1.4 MiB/s wr, 18 op/s rd, 358 op/s wr

Cache was flushing

pool cache id 84

client io 3.2 GiB/s rd, 830 op/s rd, 0 op/s wr

cache tier io 238 MiB/s flush, 14 op/s promote, 1 PGs flushing

pool mars400_rbd id 86

client io 126 MiB/s rd, 232 MiB/s wr, 44 op/s rd, 57 op/s wr

PG was evicting

pool cache id 84

client io 2.6 GiB/s rd, 0 B/s wr, 663 op/s rd, 0 op/s wr

cache tier io 340 MiB/s flush, 2.7 MiB/s evict, 21 op/s promote, 1 PGs evicting (full)

pool mars400_rbd id 86

client io 768 MiB/s rd, 344 MiB/s wr, 212 op/s rd, 86 op/s wr

PG was flushing and client I/O went directly to the base pool (clients were writing data)

pool cache id 84

client io 0 B/s wr, 0 op/s rd, 1 op/s wr

cache tier io 515 MiB/s flush, 7.7 MiB/s evict, 1 PGs flushing

pool mars400_rbd id 86

client io 613 MiB/s wr, 0 op/s rd, 153 op/s wr
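To track these activities over time rather than reading individual captures, the `cache tier io` lines can be parsed into structured values. The sketch below handles only the line format seen in the captures above; the function name `parse_cache_tier_io` is hypothetical, and other Ceph versions may print the line differently.

```python
import re

# Matches items such as "340 MiB/s flush" or "21 op/s promote".
_RATE = re.compile(r"^(?P<value>\d+(?:\.\d+)?)\s+(?P<unit>\S+/s)\s+(?P<activity>\w+)$")

_PREFIX = "cache tier io "


def parse_cache_tier_io(line):
    """Parse one 'cache tier io ...' line from `ceph osd pool stats`.

    Returns a dict mapping each activity (flush, evict, promote) to a
    (value, unit) tuple. Items that are not rates, such as
    "1 PGs evicting (full)", are collected under the "state" key.
    """
    line = line.strip()
    if not line.startswith(_PREFIX):
        raise ValueError("not a cache tier io line")
    result = {}
    for item in line[len(_PREFIX):].split(", "):
        item = item.strip()
        match = _RATE.match(item)
        if match:
            result[match.group("activity")] = (float(match.group("value")), match.group("unit"))
        else:
            result.setdefault("state", []).append(item)
    return result


if __name__ == "__main__":
    sample = "cache tier io 340 MiB/s flush, 2.7 MiB/s evict, 21 op/s promote, 1 PGs evicting (full)"
    print(parse_cache_tier_io(sample))
```

The values keep their original units (MiB/s or op/s) instead of being normalized, so nothing is lost if a capture reports a rate in a different unit.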

After the continuous test, we let the cluster rest for several hours and then repeated the 4 kB random write test. Performance was much better, because the cache had flushed and evicted enough data to free space for new writes.

This test shows that using an NVMe pool as the cache tier of an HDD pool can achieve a significant performance improvement.

Note that the performance of cache tiering cannot be guaranteed. It depends on the cache hit rate at that moment, so repeating a test with the same configuration and workload is not guaranteed to reproduce the same result.
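A simple weighted average illustrates why the hit rate matters so much. The sketch below is a simplified model, not something measured in this test: it ignores promotion, flush, and evict overhead, and the latencies of 0.2 ms for the cache and 10 ms for the base pool are hypothetical placeholders chosen only to show the shape of the curve.

```python
def expected_latency_ms(hit_ratio, cache_ms, base_ms):
    """Average request latency for a given cache hit ratio.

    Simplified model: a hit costs cache_ms, a miss costs base_ms.
    Promotion, flush, and evict costs are deliberately ignored.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * base_ms


if __name__ == "__main__":
    # Hypothetical latencies, not measurements from this benchmark.
    cache_ms, base_ms = 0.2, 10.0
    for hit_ratio in (1.0, 0.9, 0.5, 0.0):
        latency = expected_latency_ms(hit_ratio, cache_ms, base_ms)
        print(f"hit ratio {hit_ratio:.1f}: {latency:.2f} ms")
```

Even in this optimistic model, a modest drop in hit ratio moves the average latency much closer to that of the base pool, which is consistent with the run-to-run variation described above.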

If your application needs consistent performance, use a pure NVMe SSD pool.
